Ken Hewitt has written a wonderful article for American Scientist entitled "Gifts and Perils of Landslides", in which he examines the inter-relationship between the development of society and the occurrence of landslides in the Upper Indus valleys. Ken is the guru of high mountain landslides in Pakistan, having spent many field seasons mapping rock avalanche deposits in the remote upper valleys of the Hindu Kush. The article is available online at the following link:

http://www.americanscientist.org/issues/feature/2010/5/gifts-and-perils-of-landslides

His key point is that in this very rugged topography these giant landslides bring both destruction and benefits to people, the latter because they create terrain that is fertile (e.g. on lake beds formed behind landslide dams) and less steep.

The piece will be accompanied in due course by a slideshow, which will be online here. This is not yet available.

Meanwhile, the Pamir Times has an image of restarted works on the Attabad landslide, the aim of which is to widen and then, I understand, to deepen the spillway:

Monday, September 20, 2010

Saturday, November 28, 2009

The link between rainfall intensity and global temperature

One of the most interesting aspects of the global landslide database that we maintain at Durham is the way in which it has highlighted the importance of rainfall intensity in the triggering of fatal landslides. Generally speaking, to kill people a landslide needs to move rapidly, and rapid landslides appear to be triggered primarily (but note, not always) by intense rainfall events (indeed the term "cloudburst" often crops up in the reports). So, a key component of trying to understand the impacts of human-induced global climate change on landslides is the likely nature of changes in rainfall intensity, rather than in rainfall totals. Put another way, it is possible that the average annual rainfall for an area might decrease while the occurrence of landslides increases, if the rainfall arrives in more intense bursts.

There is of course a certain intuitive logic in the idea that rainfall intensity might increase with temperature. Warmer air is able to hold more moisture (as anyone who has been in the subtropics in the summer will know only too well!), and increased temperatures also drive greater convection, which is responsible for thunderstorm rainfall. However, this is a very simplistic way to look at a highly complex system, so it is not enough to rely upon this chain of logic. Until now there have been surprisingly few studies that actually quantify whether there is a relationship between global temperature and precipitation intensity, which has made the likely impact of climate change on landslides quite difficult to understand.
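The "warmer air holds more moisture" step can at least be quantified with the Clausius-Clapeyron relation. The sketch below is an illustration of that physics only, assuming the Magnus approximation for saturation vapour pressure with one widely used set of coefficients (6.112 hPa, 17.62, 243.12 °C); neither the formula nor these coefficients come from this post or from Liu et al. (2009).

```python
import math


def saturation_vapour_pressure_hpa(t_celsius: float) -> float:
    """Saturation vapour pressure over liquid water (hPa).

    Magnus approximation; the coefficients are one common published set
    (an assumption here, other sets differ slightly).
    """
    return 6.112 * math.exp(17.62 * t_celsius / (243.12 + t_celsius))


def increase_per_kelvin(t_celsius: float) -> float:
    """Fractional rise in moisture-holding capacity for 1 K of warming."""
    return (saturation_vapour_pressure_hpa(t_celsius + 1.0)
            / saturation_vapour_pressure_hpa(t_celsius)) - 1.0


for t in (0.0, 15.0, 25.0):
    print(f"{t:4.0f} C: +{100 * increase_per_kelvin(t):.1f}% per K")
```

At typical surface temperatures this gives roughly 6-7.5% more water vapour per kelvin, which is the kind of "intuitive" scaling that the results below are compared against.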

However, an important and rather useful paper examining exactly this issue has sneaked under the radar in the last few months. The paper, by Liu et al. (2009) (see reference below), was published in Geophysical Research Letters a couple of months ago. It uses data from the Global Precipitation Climatology Project (GPCP). These data can be accessed online here (so no claims that climate scientists don't publish their data, please!). The dataset provides daily rainfall totals for 2.5 x 2.5 degree grid squares across the globe, extending back almost 50 years. Liu et al. (2009) looked at the data from 1979 to 2007, comparing precipitation intensity with global temperature over this period.

Their results are both unsurprising and surprising. The unsurprising part is that they found that the occurrence of the most intense precipitation events does increase with temperature. The surprising part is the magnitude of the change - they found that a 1 K (i.e. 1 °C) increase in global temperature causes a 94% increase in the most intense rainfall events, with a decrease in moderate to light rainfall events. Indeed the median rainfall increased from 4.3 mm day−1 to 18 mm day−1, which is also a surprisingly large shift.

So why is this important in the context of landslides? Well, I think that there are probably two key implications:

1. It has long been speculated that anthropogenic warming will lead to an increase in landslides, but with little real quantitative evidence to confirm or deny this. The demonstration that higher global temperatures do lead to increased precipitation intensity starts to put some meat on the bones of this idea. Furthermore, if it is possible to directly link rainfall intensity to landslide occurrence (and there is some evidence both from my own work and from that of others that this may be possible), then it should be possible to start to examine the likely increase in landslides as warming proceeds.

2. The current global climate models assume a much lower overall increase in precipitation intensity with increasing temperature than Liu et al. (2009) suggest. Indeed most of the models assume about a 7% increase per K (i.e. per °C) of warming. For the most intense precipitation events this means that the models predict about a 9% increase, which is an order of magnitude lower than the 94% that Liu et al. (2009) found. This suggests that the rainfall projections derived from the models are probably overly conservative, possibly very much so, which is a concern. If so, then forecasts of landslide occurrence derived from these models are likely to underestimate the true impact.
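The "order of magnitude" comparison in point 2 is simple arithmetic, checked below using only the two figures quoted in the post (94% and 9% per K). The second part is purely hypothetical: it assumes the per-degree change compounds for each extra degree and that the 1979-2007 relationship holds beyond the observed range, which neither Liu et al. (2009) nor this post claims.

```python
import math

# Sensitivities quoted in the post for the most intense precipitation events.
OBSERVED_PCT_PER_K = 94.0  # Liu et al. (2009), from GPCP observations
MODELLED_PCT_PER_K = 9.0   # typical climate-model value quoted above

ratio = OBSERVED_PCT_PER_K / MODELLED_PCT_PER_K
print(f"observed / modelled = {ratio:.1f} (log10 = {math.log10(ratio):.2f})")


def frequency_multiplier(pct_per_k: float, warming_k: float) -> float:
    """Hypothetical: frequency multiplier if the per-K change compounds per degree."""
    return (1.0 + pct_per_k / 100.0) ** warming_k


for dt in (0.5, 1.0, 2.0):
    print(f"+{dt} K: x{frequency_multiplier(OBSERVED_PCT_PER_K, dt):.2f} (observed rate), "
          f"x{frequency_multiplier(MODELLED_PCT_PER_K, dt):.2f} (model rate)")
```

The ratio comes out at a little over 10, so "an order of magnitude" is a fair description; the divergence in the hypothetical projections grows quickly with warming, which is why the discrepancy matters for landslide forecasts.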

Of course, this is only one study, and it should also be noted that the most intense rainfall events are usually associated with tropical areas and with those in the path of hurricanes and in particular typhoons. There is a great deal more work to do on this topic, but the initial results provide real cause for concern.

Reference

Liu, S., Fu, C., Shiu, C., Chen, J., & Wu, F. (2009). Temperature dependence of global precipitation extremes. Geophysical Research Letters, 36(17). DOI: 10.1029/2009GL040218

Tuesday, November 3, 2009

The Willis Research Network - the world's most important hazard and risk collaboration?

Willis is a large insurance and reinsurance broker based in London. A key part of their primary business lies in arranging insurance for catastrophe risk - i.e. losses from mega-events such as an earthquake in Tokyo or San Francisco, a storm surge flood in London or a volcanic eruption near to Mexico City. Calculating the risks associated with these events is a challenging task, but of course the stakes are high as large events can induce catastrophic losses. Parts of the reinsurance industry were badly burnt by Hurricane Katrina, for example. So, in order to calculate the risks and potential losses, reinsurance companies use Catastrophe Models (usually called Cat Models), which are complex simulations of the impacts of large events. Getting these models to estimate potential losses sensibly is difficult - and of course requires a good knowledge of the science of the hazard in question.
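To give a flavour of what the Cat Models mentioned above produce, the sketch below works from a simple event loss table: a list of possible events, each with an annual occurrence rate and a modelled loss. It is a minimal illustration under stated assumptions (independent events, Poisson occurrence, made-up events and numbers) and is not how Willis or any particular commercial model actually works.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Event:
    name: str
    annual_rate: float  # expected occurrences per year
    loss: float         # modelled loss if the event occurs (e.g. USD million)


def average_annual_loss(events: list[Event]) -> float:
    """Expected loss per year: the sum of rate x loss over every event."""
    return sum(e.annual_rate * e.loss for e in events)


def exceedance_probability(events: list[Event], threshold: float) -> float:
    """Chance of at least one event with loss >= threshold in a year,
    assuming events occur as independent Poisson processes."""
    rate = sum(e.annual_rate for e in events if e.loss >= threshold)
    return 1.0 - math.exp(-rate)


# Entirely hypothetical event set, for illustration only.
events = [
    Event("hypothetical storm surge", annual_rate=0.01, loss=5_000.0),
    Event("hypothetical earthquake", annual_rate=0.002, loss=40_000.0),
    Event("hypothetical landslide cluster", annual_rate=0.1, loss=50.0),
]

print(f"AAL: {average_annual_loss(events):.1f}")
print(f"P(loss >= 1000 in a year): {exceedance_probability(events, 1_000.0):.4f}")
```

The hard part in practice is not this arithmetic but getting credible rates and losses for each event, which is exactly where knowledge of the hazard science comes in.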

A few years ago Willis approached one of my colleagues, Prof. Stuart Lane, and me to see if we would be interested in joining a research network that Willis would support. The idea was to bring together key parts of the reinsurance industry and top academic researchers on hazards and risk in order to improve the ways in which risk is modelled and handled in the insurance industry. And so the Willis Research Network was born. Initially the network consisted of seven very carefully chosen UK academic institutions - Durham, Bristol, Reading, City, Cambridge, Exeter and Imperial. Membership of the network is by invitation only, and active participation is ensured through the sponsorship by Willis of a research fellow in each institution.

A few years on, the network is now an extraordinary entity. That initial group of seven has been joined by institutions from the USA (e.g. Princeton and NCAR), Japan (e.g. Kyoto), Italy (e.g. Bologna), Germany (e.g. GFZ Potsdam) and Australia (e.g. SEA). I am pretty sure that it is now the largest and most dynamic academic-industrial hazard and risk network in the world, and it is generating some amazing research.

In the last couple of days the network announced associate membership of ten new institutions, including the British Geological Survey, the UK Met Office, the UK National Oceanography Centre, the Ordnance Survey and GNS Science in New Zealand. Research now spans natural perils, visualisation, social dislocation and financial management. I suspect that over the next few years this network will come to dominate research into catastrophic risk management. The publications section is well worth a look, not least because there are some very useful background presentations there from some of the world's top hazards researchers.

Thursday, September 3, 2009

On the dangers of Rhododendrons!

It might surprise you to hear that rhododendrons can be a major cause of landslides. As the image below shows, rhododendrons are increasingly common on the mountain slopes of the Appalachians:

As well as creating a somewhat beautiful landscape, rhododendrons have spread in the Appalachians as a result of logging and fire suppression policies. Forest fires have long been perceived as a major hazard, and fire-exposed land is highly prone to landslides (a major fear in California given the fires in this El Niño year). The Appalachians have a long landslide history - in 1969, for example, heavy rainfall associated with the passage of the remnants of a hurricane triggered 3700 debris flows, causing 150 fatalities and $116 million of economic losses.

In a recently published paper, Tristan Hales (now at Cardiff University) and colleagues (2009) looked at the role of roots in providing strength to the soil in the Appalachians. The results are quite interesting. It is clear that on many Appalachian slopes the key thing that prevents landslides is the strength provided to the soil by the roots of the trees and shrubs. Therefore, anything that reduces this strength will increase the chance of landslides. Hales et al. (2009) set out to measure the strength provided by different plants and trees in plots on slopes in the Appalachians. They found that different varieties of trees had broadly similar root strengths, but that the root strength of the native rhododendron species was markedly lower. Furthermore, rhododendron roots tend to be concentrated in the upper layers of the soil (tree roots extend much deeper), rhododendrons are less effective than trees at removing and transpiring water from the soil, and the thick bushy vegetation starves the forest floor of light, which prevents tree saplings from growing.
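The reasoning that root strength holds these slopes up can be illustrated with the textbook infinite-slope factor of safety, with a root cohesion term added to the resisting forces. This is a minimal sketch assuming the simple Wu/Waldron root-cohesion model and the standard infinite-slope equation; it is not necessarily the approach used by Hales et al. (2009), and every parameter value below is hypothetical.

```python
import math

GAMMA_W = 9.81  # unit weight of water (kN/m^3)


def wu_root_cohesion(tensile_strength_kpa: float, root_area_ratio: float,
                     k: float = 1.2) -> float:
    """Wu/Waldron-style root cohesion c_r = k * T_r * (A_r / A), in kPa.

    This simple model tends to overestimate reinforcement compared with
    fibre-bundle models; it is used here only because it is short.
    """
    return k * tensile_strength_kpa * root_area_ratio


def infinite_slope_fs(c_soil: float, c_root: float, phi_deg: float,
                      gamma: float, depth: float, slope_deg: float,
                      m: float) -> float:
    """Factor of safety of an infinite slope with root cohesion added.

    c_soil, c_root in kPa; phi_deg = friction angle (degrees); gamma = soil
    unit weight (kN/m^3); depth = depth of failure plane (m);
    m = saturated fraction of that depth (0-1).
    """
    beta = math.radians(slope_deg)
    phi = math.radians(phi_deg)
    cos2 = math.cos(beta) ** 2
    effective_normal = (gamma - m * GAMMA_W) * depth * cos2
    resisting = c_soil + c_root + effective_normal * math.tan(phi)
    driving = gamma * depth * math.sin(beta) * math.cos(beta)
    return resisting / driving


# Hypothetical site: a saturated 35-degree slope failing at 1 m depth.
site = dict(c_soil=0.0, phi_deg=30.0, gamma=18.0, depth=1.0, slope_deg=35.0, m=1.0)

# Deep tree roots cross the 1 m failure plane; shallow shrub roots do not,
# so they add no cohesion at that depth.
fs_deep_roots = infinite_slope_fs(c_root=wu_root_cohesion(10_000.0, 0.001), **site)
fs_shallow_roots = infinite_slope_fs(c_root=0.0, **site)
print(f"FS, roots crossing failure plane: {fs_deep_roots:.2f}")
print(f"FS, roots above failure plane:    {fs_shallow_roots:.2f}")
```

With these made-up numbers the slope is stable (FS above 1) only when roots cross the failure plane, which mirrors the concern about rhododendron roots being concentrated near the surface.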

All of this is of course bad news in terms of landslides. Hales et al. (2009) are keen to stress that this should not be seen as a definitive indication that rhododendrons are responsible for landslide initiation in the Appalachians, but they do note that in the most recent large landslide event, in 2004, many of the landslides were initiated in rhododendron thickets.

Reference

Hales, T., Ford, C., Hwang, T., Vose, J., & Band, L. (2009). Topographic and ecologic controls on root reinforcement. Journal of Geophysical Research, 114(F3). DOI: 10.1029/2008JF001168

Wednesday, June 3, 2009

Are satellite-based landslide hazard algorithms useful?

In some parts of the world, such as the Seattle area of the USA, wide-area landslide warning systems are operated on the basis of rainfall thresholds. These are comparatively simple in essence - basically, the combination of short-term and long-term rainfall that is needed to trigger landslides is determined, often using historical records of landslide events. A critical threshold is then set for the combination of these two rainfall amounts - so, for example, it might require 100 mm of rainfall within a few hours after a dry spell, but only 50 mm after a wet period. These threshold rainfall levels have been determined for many areas; indeed, there is even a website dedicated to the thresholds!
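The idea of a threshold that depends on antecedent wetness can be sketched in a few lines. This is a hypothetical illustration only: it reuses the example figures from the paragraph above (100 mm after a dry spell, 50 mm after a wet period), while the linear shape and the 150 mm "fully wet" antecedent value are assumptions of mine; real thresholds, such as Seattle's, are fitted to historical landslide records.

```python
def short_term_threshold_mm(antecedent_mm: float,
                            dry_threshold_mm: float = 100.0,
                            wet_threshold_mm: float = 50.0,
                            saturating_antecedent_mm: float = 150.0) -> float:
    """Short-term rainfall needed to trigger landslides, given antecedent rain.

    Hypothetical linear form: the threshold falls from dry_threshold_mm (no
    antecedent rain) to wet_threshold_mm once antecedent rain reaches
    saturating_antecedent_mm, and stays there for wetter conditions.
    """
    wetness = min(max(antecedent_mm / saturating_antecedent_mm, 0.0), 1.0)
    return dry_threshold_mm - wetness * (dry_threshold_mm - wet_threshold_mm)


def threshold_exceeded(short_term_mm: float, antecedent_mm: float) -> bool:
    """True if the short-term rainfall meets or exceeds the critical threshold."""
    return short_term_mm >= short_term_threshold_mm(antecedent_mm)


print(threshold_exceeded(70.0, antecedent_mm=0.0))    # False: dry spell
print(threshold_exceeded(70.0, antecedent_mm=200.0))  # True: after a wet period
```

The same 70 mm burst is harmless after a dry spell but exceeds the threshold on wet ground, which is the essence of the two-variable approach.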

In 1997 NASA and NASDA (now part of JAXA) launched a satellite known as TRMM (Tropical Rainfall Measuring Mission), which uses a suite of sensors to measure rainfall in the tropical regions. Given that it orbits the Earth 16 times per day, most tropical areas get pretty good coverage. A few years ago Bob Adler, Yang Hong and their colleagues started to work on the use of TRMM for landslide warnings using a modified version of rainfall thresholds. Most recently, this work has been developed by Dalia Bach Kirschbaum - and we have all watched the development of this project with great interest. The results have now been published in a paper (Kirschbaum et al. 2009) in the EGU journal Natural Hazards and Earth System Sciences (NHESS) - which is great because NHESS is an open access journal, meaning that you can download it for free from here.

Of course a rainfall threshold on its own doesn't tell you enough about the likelihood of a landslide. For example, no matter how hard it rains, a landslide is not going to occur on a flat lowland plain. To overcome this, the team generated a simple susceptibility index based upon weighted, normalised values of slope, soil type, soil texture, elevation, land cover and drainage density. The resulting susceptibility map is shown below, with landslides that occurred in 2003 and 2007 indicated on the map:
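The susceptibility index described above is, in essence, a weighted sum of normalised factors per grid cell. The sketch below shows that general technique under assumptions of mine: min-max normalisation, only two factors, and made-up weights and values. Kirschbaum et al. (2009) used six factors and their own weighting scheme, which this does not reproduce.

```python
from typing import Dict, List


def min_max_normalise(values: List[float]) -> List[float]:
    """Rescale to 0-1; a constant factor carries no information, so map it to 0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def susceptibility_index(factors: Dict[str, List[float]],
                         weights: Dict[str, float]) -> List[float]:
    """Weighted sum of normalised factors, one value (0-1) per grid cell.

    Each factor must already be a per-cell score in which larger means more
    landslide-prone; categorical factors such as soil type or land cover
    would need to be scored before being passed in.
    """
    n_cells = len(next(iter(factors.values())))
    total_weight = sum(weights[name] for name in factors)
    index = [0.0] * n_cells
    for name, values in factors.items():
        w = weights[name] / total_weight
        for i, v in enumerate(min_max_normalise(values)):
            index[i] += w * v
    return index


# Hypothetical three-cell example: a plain, a foothill and a steep valley side.
factors = {
    "slope_deg": [2.0, 15.0, 35.0],
    "drainage_density": [0.5, 1.0, 2.0],
}
weights = {"slope_deg": 0.6, "drainage_density": 0.4}
print([round(s, 2) for s in susceptibility_index(factors, weights)])
```

The flat plain scores zero whatever the rainfall, which is precisely the case the index is meant to screen out.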

A simple rainfall threshold was then applied as shown below:

Thus, if an area has high landslide susceptibility and the rainfall, analysed using 3-hour data from TRMM, lies above the threshold line shown above, then a warning can be issued.
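The warning rule just described combines two tests: high susceptibility and rainfall above a threshold. The sketch below assumes a power-law intensity-duration threshold of the form I = a·D^b, with Caine's (1980) widely cited global coefficients as placeholders; the 0.7 susceptibility cut-off is hypothetical, and none of these are necessarily the values used by Kirschbaum et al. (2009).

```python
def id_threshold_mm_per_hr(duration_hr: float, a: float = 14.82,
                           b: float = -0.39) -> float:
    """Rainfall intensity-duration threshold I = a * D**b (I in mm/h, D in h).

    Defaults are Caine's (1980) global coefficients, used only as placeholders.
    """
    return a * duration_hr ** b


def issue_warning(susceptibility: float, rain_mm: float, duration_hr: float,
                  susceptibility_cutoff: float = 0.7) -> bool:
    """Warn only where a cell is highly susceptible AND rainfall exceeds the threshold."""
    if duration_hr <= 0:
        raise ValueError("duration_hr must be positive")
    intensity = rain_mm / duration_hr
    return (susceptibility >= susceptibility_cutoff
            and intensity >= id_threshold_mm_per_hr(duration_hr))


# One 3-hour rainfall accumulation per (hypothetical) grid cell.
print(issue_warning(susceptibility=0.9, rain_mm=45.0, duration_hr=3.0))  # True
print(issue_warning(susceptibility=0.9, rain_mm=20.0, duration_hr=3.0))  # False
print(issue_warning(susceptibility=0.3, rain_mm=45.0, duration_hr=3.0))  # False
```

Requiring both conditions is what stops heavy rain on a flat plain (low susceptibility) from triggering a warning.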

Kirschbaum et al. (2009) have analysed the results of their study using the landslide inventory datasets shown in the map above. Great care is needed in the interpretation of these datasets as they are derived primarily from media reports, which of course are heavily biased in many ways. Examination of the map above does show this - look for example at the number of landslide reports for the UK compared with New Zealand. The apparent number in the UK is much higher than in NZ, even though the latter is far more landslide prone; in New Zealand the population is small, the news media is lower profile, and landslides are an accepted part of life. However, so long as one is aware of these limitations, this is a reasonable starting point for analysing the effectiveness of the technique.

So, how did the technique do? Well, at a first look not so well:

In many cases the technique failed to forecast landslides that actually occurred, whilst it also dramatically over-forecasted (i.e. forecasted landslides in areas in which none were recorded). However, one must bear in mind the limitations of the dataset. It is very possible that landslides occurred but were not recorded, so at least to a degree the real results are probably better than the paper indicates. Otherwise, the authors admit that the susceptibility tool is probably far too crude, and the rainfall data too imprecise, to achieve the level of precision that is required. Against this, one should note that the algorithm does very well (as indicated by the green pixels on the map above) in some of the key landslide-prone areas - e.g. along the Himalayan Arc, in Java, in SW India, the Philippines, the Rio de Janeiro area, parts of the Caribbean, and the mountains around the Chengdu basin. In places there is marked under-estimation - e.g. in Pakistan, parts of Europe and N. America. In other places there was dramatic over-estimation, especially in the Amazon Basin, most of India, Central Africa and China.
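The under- and over-forecasting described above is usually summarised with a 2x2 contingency table of hits, misses and false alarms. The sketch below computes three standard scores and shows how unreported landslides distort them; the scores are generic forecast-verification measures, not necessarily those reported by Kirschbaum et al. (2009), and all counts are hypothetical.

```python
import math


def verification_scores(hits: int, misses: int, false_alarms: int) -> dict:
    """Standard 2x2 contingency scores for a yes/no forecast.

    POD: share of observed landslides that were forecast (1 is perfect).
    FAR: share of forecasts with no recorded landslide (0 is perfect).
    CSI: hits over all forecast-or-observed events (1 is perfect).
    """
    def ratio(num: int, den: int) -> float:
        return num / den if den else math.nan

    return {
        "POD": ratio(hits, hits + misses),
        "FAR": ratio(false_alarms, hits + false_alarms),
        "CSI": ratio(hits, hits + misses + false_alarms),
    }


# Hypothetical counts, not results from Kirschbaum et al. (2009).
as_recorded = verification_scores(hits=20, misses=30, false_alarms=80)
# Suppose 20 of those "false alarms" were real but unreported landslides.
with_unreported = verification_scores(hits=40, misses=30, false_alarms=60)
print(as_recorded)
print(with_unreported)
```

Reclassifying unreported events as hits raises the detection rate and lowers the false alarm ratio, which is why an incomplete inventory makes the algorithm look worse than it may really be.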

All of this suggests that the algorithm is not ready for use as an operational landslide warning system. Against that, though, the approach does show some real promise. I suspect that an improved susceptibility algorithm would help a great deal (maybe using the World Bank Hotspots approach), perhaps together with a threshold that varies by area (e.g. it is clear that the threshold rainfall for Taiwan is very different to that of the UK). Kirschbaum et al. (2009) have produced a really interesting piece of work that represents a substantial step along the way. One can only hope that it is developed further and that, in due course, an improved version of TRMM is launched (preferably a constellation of satellites, to give better temporal and spatial coverage). That would of course be a far better use of resources than spending $4,500 million on the James Webb Space Telescope.

Reference

Kirschbaum, D. B., Adler, R., Hong, Y., & Lerner-Lam, A. (2009). Evaluation of a preliminary satellite-based landslide hazard algorithm using global landslide inventories. Natural Hazards and Earth System Sciences, 9, 673-686.

Tuesday, May 19, 2009

To explore or to research? That is not the question.

Some readers will be aware that there has been a rumpus during the last few months at the Royal Geographical Society (RGS-IBG) over its policy not to organise and run its own expeditions. A small but influential group (the Beagle Campaign) petitioned for, and got, a Special General Meeting of the Fellows of the Society, culminating in a vote on the issue, on Monday. The resolution, which was opposed unanimously by members of the Council, was defeated (but the vote was quite close on a large turn-out), meaning that the current policy is retained, although this policy was always scheduled to be reviewed over the next year.

You may wonder why I am posting on this issue on a landslide blog. Well, I am an Honorary Secretary of the RGS-IBG, and thus am a member of the Council. In the months leading up to the vote I was careful not to comment in any public forum on the issue - I felt that in the interests of fairness it was best to remain silent (whilst encouraging all Fellows, regardless of their views on the resolution, to vote) - but now that the vote is over I will explain why I was opposed to the resolution. I write only from an entirely personal perspective - I was in Hong Kong on the day of the vote and have been here since. I have not discussed the vote with any other Council members, and I most certainly do not write on behalf of the Society.

First and foremost, the RGS-IBG has a long and proud tradition of fieldwork. I am a great advocate of field research - my early career was to a large degree built upon it - and I strongly believe that there is a great deal to learn from fieldwork that cannot be learnt from lab studies or from models. The key question therefore is not whether field research should be undertaken (every member of the Council is resolute about the importance of field research), but how it should be organised and funded. I guess in a sense this is where the Beagle Campaign followers and I disagree. They argue, passionately and impressively, that it is best undertaken through large, reasonably long-term expeditions. I argue that it is best undertaken through larger numbers of smaller-scale, focused studies. Let me explain why.

In my view there is no doubt at all that the world is facing some pretty serious challenges. Climate change is the most widely discussed, but population growth, urbanisation, deforestation, biodiversity collapse, energy security, inequality and oceanic environmental degradation, for example, are serious challenges too (some may actually be more serious than climate change). To help us to deal with these issues we need the best research undertaken by the best researchers in the optimum locations. The clock is ticking (loudly); we cannot be complacent. Geographers undoubtedly have a substantial part to play - which other discipline covers all of the above issues? Therefore, the RGS-IBG, as one of the premier Geography organisations, must take a lead. I think that the Society is doing so, and am proud of my (very small) role in this.

Unfortunately, the amount of resource that the RGS-IBG has at its disposal is comparatively small. The key question therefore is how the Society can best use that resource to achieve the necessary aims. My view is that it should direct comparatively small amounts of money at very high quality research that addresses fundamental issues (and by fundamental I mean key issues conceptually or in terms of influence). This already happens at almost every level - substantial shares go to undergraduate groups, to postgraduates, to early career researchers and to established researchers. The funds are generally used to "leverage" (dreadful word) other funds to great effect. The funding is thus both efficient and effective.

Other pots support more adventurous activities. The Land Rover-sponsored Go Beyond bursary, for example, provides funding and a Land Rover Defender to allow non-scientific expeditions (my own car is a Defender - there is no better vehicle for this).

Unfortunately, I just cannot see how the large-scale, multi-researcher but single-location expedition approach can achieve what the current approach achieves. Almost any location is ideal for only a small range of contemporary problems. A location that is good for research on one problem (e.g. climate change in the high latitudes) does not necessarily facilitate research on another (e.g. urbanisation), meaning that the RGS-IBG would have to balance one threat against another. The current approach allows the Society to address a wide range of issues in a wide range of environments through researchers at all levels, and still to produce world-class research. The RGS-IBG should be proud of this - it is a remarkable achievement.

The newspaper articles supporting the Beagle Campaign (of which there were many) gave the impression that both models are possible - i.e. the RGS-IBG could both organise its own expeditions and support smaller-scale field research projects. Superficially this is attractive, but I think one must be realistic. In the current economic climate, the pot of funding available to the Society is inevitably at best static, and possibly contracting. I can find no reason to believe that in this context the RGS-IBG would be able to maintain its existing activities and support large-scale expeditions. I might be wrong, but to try to do so would be immensely risky.

Therefore I believe that the current policy is the right one. However, I recognise that the planned review may recommend a change of policy, and that, once all the factors have been taken into account, this may be the right way to go. The review will undoubtedly be thorough and balanced, and it will then be up to Council to decide on the best way forward once the recommendations have been made. Given that there are elections for places on Council next month - and one of the positions being contested is the one that I currently hold - there are plenty of opportunities to influence this policy.

Your comments are welcome.

Dave Petley

Hong Kong,

20th May 2009

You may wonder why I am posting on this issue on a landslide blog? Well, I am an Honorary Secretary of the RGS-IBG, and thus am a member of the Council. In the months leading up to the vote I have been careful not to comment in any public forum on the issue - I felt that in the interests of fairness it was best to remain silent (whilst encouraging all Fellows, regardless of their views of the resolution, to vote) - but now that the vote is over I will explain why I was opposed to the resolution. I write only from absolutely personal perspective - I was in Hong Kong on the day of the vote and have been here since. I have not discussed the vote with any other Council members, and I most certainly do not write on behalf of the Society.

First and foremost, the RGS-IBG has a long and proud tradition of fieldwork. I am a great advocate of field research - my early career was to a large degree built upon it - and I strongly believe that there is a great deal to learn from fieldwork that cannot be learnt from lab studies or from models. The key question therefore is not whether field research should be undertaken (every member of the Council is resolute about the importance of field research), but how should it be organised and funded. I guess in a sense this is where the Beagle Campaign followers and I disagree. They argue, passionately and impressively, that it is best undertaken through large, reasonably long-term expeditions. I argue that it is best undertaken through larger numbers of smaller scale, focused studies. Let me explain why.

In my view there is no doubt at all that the world is facing some pretty serious challenges. Climate change is the most widely discussed, but population growth, urbanisation, deforestation, biodiversity collapse, energy security, inequality and oceanic environmental degradation for example are serious challenges too in my view (some may be more serious than climate change actually). To help us to deal with these issues we need the best research undertaken by the best researchers in the optimum locations. The clock is ticking (loudly), we cannot be complacent. Geographers undoubtedly have a substantial part to play - which other discipline covers all of the above issues? Therefore, the RGS-IBG, as one of the premier Geography organisations, must take a lead. I think that the society is so doing, and am proud of my (very small) role in this.

Unfortunately, the amount of resource that the RGS-IBG has at its disposal is comparatively small. A key question therefore is what is the best way for the Society to use that resource to achieve the necessary aims? My view is that it is to direct comparatively small amounts of money at very high quality research that addresses fundamental issues (and by fundamental I mean key issues conceptually or in terms of influence). This occurs at almost every level - substantial shares go to undergraduate groups, to postgraduates, to early career researchers and to established researchers. The funds are generally used to "leverage" (dreadful word) other funds to great effect. The funding is thus both efficient and effective.

Other pots support more adventurous activities. The Land Rover-sponsored Go Beyond bursary, for example, provides funding and a Land Rover Defender to allow non-scientific expeditions (my own car is a Defender - there is no better vehicle for this).

Unfortunately, I just cannot see how the large-scale, multi-researcher but single-location expedition approach can achieve what the current approach achieves. Any one location is ideal for only a small range of contemporary problems. What is good for research on one issue (e.g. the high latitudes for climate change) does not facilitate research on another (e.g. urbanisation), meaning that the RGS-IBG would have to balance one threat against another. The current approach allows the Society to address a wide range of issues in a wide range of environments by all levels of researchers, and still to produce world-class research. The RGS-IBG should be proud of this - it is a remarkable achievement.

The newspaper articles supporting the Beagle Campaign (of which there were many) gave the impression that both models are possible - i.e. the RGS-IBG could organise its own expeditions and also support smaller-scale field research projects. Superficially this is attractive, but I think one must be realistic. In the current economic climate the pot of funding available to the Society is at best static, and possibly contracting. I can find no reason to believe that in this context the RGS-IBG would be able to maintain its existing activities and support large-scale expeditions. I might be wrong, but to try to do so would be immensely risky.

Therefore I believe that the current policy is the right one. However, I recognise that the planned review may recommend a change of policy, and that, once all the factors have been taken into account, this might be the right way to go. The review will undoubtedly be thorough and balanced, and it will then be up to Council to decide on the best way forward once the recommendations have been made. Given that there are elections for places on Council next month - and one of the positions being contested is the one that I currently hold - there are plenty of opportunities to influence this policy.

Your comments are welcome.

Dave Petley

Hong Kong,

20th May 2009

Friday, March 6, 2009

The role of landslides in global warming

A rather extraordinary paper has just been published in Geophysical Research Letters about landslides triggered by the Wenchuan (Sichuan) earthquake. Why is it extraordinary - well, let me quote from the abstract. The paper suggests that the landslides caused destruction of vegetation such that "the cumulative CO2 release to the atmosphere over the coming decades is comparable to that caused by hurricane Katrina 2005 (~105 Tg) and equivalent to ~2% of current annual carbon emissions from global fossil fuel combustion."

Wow! In case you are struggling to decode the above, this suggests that the landslides triggered by the earthquake caused a massive loss of vegetation that will now decay. In decaying it will release CO2, which will add to the effects of global warming. This is a pretty interesting result - and it has already been picked up by the mainstream media.

So, how do the authors reach these remarkable conclusions, and are they valid? Well, I am afraid that I have some serious doubts about this study, which seems to be based on some misunderstandings of earthquake-induced landslides. Let's base the analysis on Fig 2 of the paper, reproduced below, in which the authors highlight one of the landslides that blocked the river valley upstream of Beichuan:

So why do I object so strongly to the paper? Well, first, the use of terminology is inexcusably weak. For example, the authors describe the landslides thus (referring to Fig 2a): "(a) Living carbon scars left by mudslides, which indicates the geographical locations of the landslides for this region." NO - these are not mudslides; these are clearly shallow rockslides, a very different beast. Of Figure 2b they say "A quake surface wave triggered basal sliding that initiated the movement through liquefying the top ~2 m slab." NO. Failure was not due to liquefaction, and even the most cursory view of the image shows that more than 2 m of material was displaced. Finally, they say of Fig. 2d "An aerial photo taken on May 26, 2008, showing the landslide mud that formed the Tangjiashan quake lake". No again - this is most definitely not mud (see image below); it is bouldery / fragmented debris (had it been mud, the problems would have been far less serious). They say that their model suggests that "The material reaches a maximum speed of 5 m s−1 but only briefly because the resistance stress is strong for the still coherent sliding material." Again, this is poppycock. 5 m/s is 18 km/hour - there is no way that this failure was as slow as that. Look at how the debris fragmented and at how it spread across the valley (see image of the landslide deposit being excavated for the drainage channel below):

This is not a deposit that was emplaced at 5 m/s, and nor is it mud. Pretty poor stuff, frankly. Note finally that figs 2b and 2d are supposed to represent the same area. However, in 2d the debris is clearly in the valley floor, with the source being the slopes above, whereas in 2b the debris is above the 1155 m contour line. There is no debris between 1100 m and 1155 m - so the deposit areas are completely different.

So now let's turn to the modelling. The paper is ridiculously short of proper detail of what they have actually done - I cannot understand how the editors/referees let this through. It states that they have used an "advanced modeling tool—a scalable and extensible geo-fluid model—that explicitly accounts for soil mechanics, vegetation transpiration and root mechanical reinforcement, and relevant hydrological processes. The model considers non-local dynamic balance of the three dimensional topography, soil thickness profile, basal conditions, and vegetation coverage ... in determining the prognostic fields of the driving and resistive forces, and describes the flow fields and the dynamic evolution of thickness profiles of the medium considered, be it granular or plastic."

Hmmm! Not sure what this means really. They do state that "we need to use the finest possible digital elevation model (DEM) and soil profile data". However, they have actually used the SRTM data-set, which has a spatial resolution of 30 metres at best, and possibly 90 metres (!). I cannot believe that this is anything like good enough. Where velocity exceeded 1 m/s in their model they assume that vegetation is destroyed. They have used this to determine the total amount of vegetation lost, and then calculated the resulting contribution of CO2 to the atmosphere.
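To make the procedure concrete, here is a minimal sketch of a threshold-and-sum calculation of the kind the paper appears to describe: flag grid cells whose modelled velocity exceeds 1 m/s as stripped of vegetation, convert the flagged area to carbon, then to CO2. This is my illustrative reconstruction, not the authors' code. The 90 m cell size is the coarser SRTM option mentioned above; the carbon density, the velocity grid and the function name `vegetation_co2_release` are all hypothetical.

```python
# Illustrative reconstruction only: these values are NOT from Ren et al. (2009).
CELL_SIZE_M = 90.0              # SRTM-like grid spacing (the coarser option)
VELOCITY_THRESHOLD_M_S = 1.0    # cells moving faster are assumed to lose their vegetation
CARBON_T_PER_HA = 100.0         # hypothetical living-carbon density, tonnes C per hectare
CO2_PER_C = 44.0 / 12.0         # molar-mass ratio converting tonnes C to tonnes CO2


def vegetation_co2_release(peak_velocity: list[list[float]]) -> tuple[float, float]:
    """Return (stripped area in km^2, CO2 in tonnes) from a grid of modelled peak velocities.

    Assumes all carbon in a stripped cell is eventually released as CO2 (no regrowth).
    """
    stripped_cells = sum(v > VELOCITY_THRESHOLD_M_S for row in peak_velocity for v in row)
    area_m2 = stripped_cells * CELL_SIZE_M ** 2
    carbon_t = (area_m2 / 10_000.0) * CARBON_T_PER_HA  # 1 ha = 10,000 m^2
    return area_m2 / 1e6, carbon_t * CO2_PER_C


velocities = [[0.2, 1.5, 3.0],
              [0.8, 2.2, 0.5],
              [0.0, 1.1, 0.9]]
area_km2, co2_t = vegetation_co2_release(velocities)
print(f"{area_km2:.4f} km^2 stripped, about {co2_t:,.0f} t CO2")
```

At 90 m spacing each cell represents 0.81 ha, so a single misclassified cell shifts the estimate by that much; the output can be no better than the DEM and velocity field that feed it.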

There are several problems with this. First, the landslide model appears to be erroneous, as described above. Second, they seem to omit the uptake of CO2 by vegetation as it re-establishes on the slide scars, which in the long term will balance that emitted. Finally, note that they say 2% of CO2 emitted by burning fossil fuels, not 2% of all anthropogenic sources; this makes the contribution sound larger than it actually is. Indeed, a one-off release equivalent to 2% of a single year's emissions, spread over a substantial period (it doesn't say how long), indicates a comparatively minor annual total.
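The annualisation point is simple arithmetic. A minimal sketch, assuming the one-off release (equivalent to ~2% of one year's fossil-fuel emissions) is spread evenly over a period that the paper does not specify; the periods below are hypothetical, and regrowth uptake, which would reduce the figures further, is ignored.

```python
def annual_share(cumulative_share: float, years: float) -> float:
    """Average yearly contribution if a one-off release is spread evenly over `years`."""
    return cumulative_share / years


CUMULATIVE_SHARE = 0.02  # ~2% of one year's fossil-fuel CO2, as quoted from the abstract

for years in (10, 20, 50):  # hypothetical release periods; the paper does not give one
    print(f"over {years} years: {annual_share(CUMULATIVE_SHARE, years):.2%} of annual emissions per year")
```

Even over a single decade this amounts to roughly 0.2% of annual fossil-fuel emissions per year.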

In my view the basis of the paper is iffy, although it would have helped if the methodology had been properly outlined. Unfortunately, the work is already being picked up by the climate change denier community. Read this and weep. The logic used by Paul Fuhr in this opinion piece makes no sense at all to me, but the fact that he can use this paper in this way is deeply unfortunate, providing yet more ammunition for the pseudo-science community of climate change deniers.

Reference

Diandong Ren, Jiahu Wang, Rong Fu, David J. Karoly, Yang Hong, Lance M. Leslie, Congbin Fu, Gang Huang (2009). Mudslide-caused ecosystem degradation following Wenchuan earthquake 2008. Geophysical Research Letters, 36(5). DOI: 10.1029/2008GL036702

Friday, January 9, 2009

Future British seasonal precipitation extremes - implications for landslides

This week an important paper has been published by Fowler and Ekström (2009), which seeks to look at the likely changes to very intense rainfall events in the UK. Helen Fowler is based just up the road from me at Newcastle (the city with the chronically under-performing football team), and she and her co-author have used modelling ensembles to examine how UK precipitation regimes are likely to change in the period 2070 to 2100 under the SRES A2 emissions scenario, which is currently effectively our best estimate as to how carbon dioxide emissions will change with time (Fig. 1).

Fig 1: SRES Emissions Scenarios. A2, as used in this study, is shown in Fig. (b). Source: http://www.grida.no/publications/other/ipcc_sr/?src=/Climate/ipcc/emission/014.htm

Ensemble modelling looks at the results of a series of different climate models to examine the range of outputs. Each model operates in a slightly different way, so there will always be a range of results; papers presenting ensemble model outcomes therefore always present a range. One of the key issues of interest is whether there is some consistency between them. Of course the results of such modelling runs are highly complex - in this paper the authors have looked at the 1 day and 10 day precipitation events with a current return period of 25 years. The 1 day event can be thought of as the impact of an intense storm; the 10 day event probably simulates a series of low pressure systems tracking across the country, as has happened several times in the last few years. In landslide terms the 1 day storms might trigger catastrophic debris flows and shallow failures, whilst the 10 day events might trigger deeper-seated and larger slope failures.
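One simple way to express "consistency between models" is to report the ensemble range together with the fraction of models that agree on the sign of the change. The sketch below does this; it is a generic summary, not the pooling approach used by Fowler and Ekström, and the model names and percentage changes are invented for illustration.

```python
from statistics import median

# Hypothetical per-model % changes in one seasonal extreme; NOT values from Fowler and Ekström (2009).
ensemble = {"model_a": 12.0, "model_b": 25.0, "model_c": 5.0, "model_d": -3.0}


def summarise(changes: dict[str, float]) -> dict[str, float]:
    """Return the ensemble range, median and the share of models agreeing on the sign of change."""
    values = list(changes.values())
    n_up = sum(v > 0 for v in values)   # models projecting an increase
    n_other = len(values) - n_up        # models projecting a decrease (or no change)
    return {
        "min": min(values),
        "median": median(values),
        "max": max(values),
        "sign_agreement": max(n_up, n_other) / len(values),
    }


print(summarise(ensemble))
```

A mixed result such as the summer signal, with some models showing decreases and others increases, would show up here as a low sign agreement and a range straddling zero.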

First the models are run for a control period (1961-1990) to check that they can realistically simulate observed conditions. They can. The models are then run for the period 2070 to 2100, and the results are pooled using a fairly interesting approach. The first thing to say is that the Global Climate Models (GCMs) do predict a much warmer climate - global mean temperatures are predicted to be 3.1 to 3.56 degrees warmer than at present. Interestingly, though, the occurrence of these intense rainfall events also greatly increases for three of the four seasons:

Winter: Increases in occurrence of extreme precipitation of 5 to 30%

Spring: Increases in occurrence of extreme precipitation of 10 to 25%

Summer: Very varied results, with some models suggesting decreases and others increases. More work is needed.

Autumn (Fall): Increases in occurrence of extreme precipitation of 5 to 25%

A few of the models do predict larger (and smaller) increases - look at the paper for the full detail. Overall, the authors conclude that "Nevertheless, importantly for policy makers, the multi-model ensembles of change project increases in extreme precipitation for most UK regions in winter, spring and autumn. This change is physically consistent with warmer air in the future climate being able to hold more moisture. The use of multi-day extremes and return periods also showed that short-duration extreme precipitation is projected to increase more than longer-duration extreme precipitation, where the latter is associated with narrower uncertainty ranges."
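The "warmer air can hold more moisture" argument in the quotation rests on the Clausius-Clapeyron relation. A minimal sketch, using the widely used Magnus-type approximation with Bolton (1980) coefficients (my choice, not something taken from the paper), shows saturation vapour pressure rising by roughly 6 to 7.5% per degree at typical temperatures. Note that this is the moisture-holding capacity of the air, not a direct forecast of rainfall.

```python
import math


def saturation_vapour_pressure_hpa(temp_c: float) -> float:
    """Saturation vapour pressure over water (hPa), Magnus-type formula with Bolton (1980) coefficients."""
    return 6.112 * math.exp(17.67 * temp_c / (temp_c + 243.5))


def fractional_increase_per_degree(temp_c: float) -> float:
    """Relative increase in saturation vapour pressure for a 1 degree C warming from temp_c."""
    return saturation_vapour_pressure_hpa(temp_c + 1.0) / saturation_vapour_pressure_hpa(temp_c) - 1.0


for t in (0.0, 10.0, 20.0):
    print(f"{t:>4.0f} C: +{fractional_increase_per_degree(t):.1%} per degree")
```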

The implications for landslides are stark. Increases of this magnitude in the occurrence of extreme precipitation events will inevitably increase the occurrence of slope failures. Unfortunately, as landslides are triggered by just a small proportion of our existing rainstorm events (those in the extreme tail), increases in this range are likely to have a disproportionate impact.
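The "disproportionate impact" argument can be illustrated with an extreme value distribution. A minimal sketch, assuming annual-maximum daily rainfall follows a Gumbel distribution with made-up parameters (not values from the paper): treat today's 25-year event as a fixed landslide-triggering threshold, make every storm 10% more intense, and see how often the old threshold is exceeded.

```python
import math


def gumbel_return_level(mu: float, beta: float, return_period: float) -> float:
    """Rainfall depth exceeded on average once every `return_period` years (Gumbel / EV1)."""
    return mu - beta * math.log(-math.log(1.0 - 1.0 / return_period))


def gumbel_exceedance(x: float, mu: float, beta: float) -> float:
    """Annual probability that the annual maximum exceeds x."""
    return 1.0 - math.exp(-math.exp(-(x - mu) / beta))


MU, BETA = 50.0, 15.0                            # hypothetical parameters, mm
threshold = gumbel_return_level(MU, BETA, 25.0)  # today's 25-year event

SCALE = 1.10  # assume every storm becomes 10% more intense (scales both parameters)
p_now = gumbel_exceedance(threshold, MU, BETA)
p_future = gumbel_exceedance(threshold, MU * SCALE, BETA * SCALE)

print(f"threshold {threshold:.1f} mm: annual probability {p_now:.3f} -> {p_future:.3f}, "
      f"return period {1 / p_now:.0f} -> {1 / p_future:.1f} years")
```

With these invented parameters a 10% intensification makes today's 25-year event roughly 1.8 times as frequent (a return period of about 14 years), which is the sense in which a modest shift in intensity has an outsized effect on the rare storms that trigger landslides.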

Of course the next thing to do will be to build the outputs of these models into slope stability models. This will be a fascinating exercise.

Reference:

H. J. Fowler, M. Ekström (2009). Multi-model ensemble estimates of climate change impacts on UK seasonal precipitation extremes. International Journal of Climatology. DOI: 10.1002/joc.1827

Thursday, December 18, 2008

Spatial patterns of deaths from natural hazards in the US

There is a very interesting paper in press in the International Journal of Health Geographics on the spatial patterns of mortality (deaths) from natural hazards in the United States. The paper, entitled "Spatial Patterns of Natural Hazards Mortality in the United States" by Borden and Cutter, is in pre-print form but can be downloaded as a PDF here. First up, let's be clear that the authors are reputable - Susan Cutter in particular has made a massive contribution to our understanding of the social impacts of natural hazards and, unlike many working in this field, she has managed to keep her materials sensible, balanced and approachable.

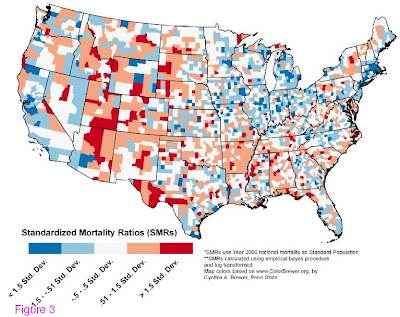

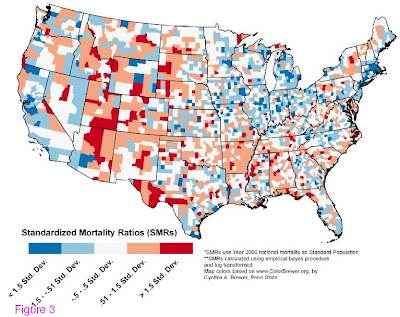

The paper looks at the spatial pattern of mortality from hazards using an improved dataset originally based upon the Spatial Hazard Event and Loss Database (SHELDUS, available at http://sheldus.org), covering the period 1970-2004. Much of the paper relates to the reliability of the data and the quality of the database, but there are also some interesting outcomes in terms of the spatial distribution and the causes of death.

First, the spatial distribution is perhaps not what one would expect (Fig. 1). Intuitively one might say that the highest level of mortality would be on the west coast given the earthquake hazard, but in fact the map shows no clear spatial pattern, with pockets of high mortality occurring across the whole of the country. There is a slightly higher level of mortality in the Midwest than in the east, perhaps reflecting the pattern of temperature extremes.

Figure 1: The map of the spatial distribution of mortality across the USA for the period 1970-2004. This map is Fig. 3 from Borden and Cutter (2009) (Biomed Central Ltd).

The reason for this is that there is a vast range of causes of death in natural hazards in the USA, with temperature-related deaths (either from heat or cold) dominating.

So what of landslides? Well, the combined datasets indicate 170 landslide fatalities out of a total of 19,958, which is 0.85% of the total. In comparison, geophysical hazards (presumably earthquakes and volcanoes) provide 302 deaths (1.5% of the total).
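The percentages follow directly from the counts quoted above; a trivial check (the function name is mine):

```python
def share_of_total(deaths: int, total: int) -> float:
    """Deaths from one hazard type as a percentage of all natural hazard deaths."""
    return 100.0 * deaths / total


TOTAL = 19_958  # all natural hazard deaths in the combined dataset, 1970-2004
for hazard, deaths in {"landslides": 170, "geophysical": 302}.items():
    print(f"{hazard}: {share_of_total(deaths, TOTAL):.2f}%")
```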

One thing that the study highlights, although it does not really discuss it explicitly, is the key importance of the time period in such a study, bearing in mind the low-frequency, high-magnitude nature of natural hazards. For instance, the time period does not include Hurricane Katrina in 2005 - I suspect that the map might look a little different if the >1800 fatalities in this event were included. Similarly, the period does not include a very large earthquake in California or on the Cascadia subduction zone. Therefore, great care must be taken in using such data to evaluate risk rather than impact.
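The sampling problem can be put into numbers. A minimal sketch, assuming independent years and using hypothetical return periods for a catastrophic event, computes the chance that the 35-year record (1970-2004) contains no such event at all.

```python
def prob_no_event(return_period_years: float, window_years: int) -> float:
    """Chance that a record of `window_years` contains no event with the given return period,
    assuming each year independently has probability 1 / return_period_years of an event."""
    return (1.0 - 1.0 / return_period_years) ** window_years


WINDOW = 2004 - 1970 + 1  # the 35-year record used in the study

for T in (50, 100, 500):  # hypothetical return periods for a catastrophic event
    print(f"T = {T:>3} yr: {prob_no_event(T, WINDOW):.0%} chance the record contains none")
```

For an event with a 100-year return period the chance that the record misses it entirely is about 70%, so a 35-year mortality record is a poor guide to long-term risk.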

The paper finishes by acknowledging that much work is needed on this type of analysis. The paper presented here is a very useful step along the way.

Reference

Borden, K.A. and Cutter, S.L. 2008 in press. Spatial Patterns of Natural Hazards Mortality in the United States. International Journal of Health Geographics, 7:64. doi:10.1186/1476-072X-7-64.

Thursday, September 18, 2008

Global warming and landslide occurrence

One of the most vexed questions in landslide science at the moment is that of the potential link between climate change and mass movement occurrence. I have yet to meet a landslide researcher who does not believe in the reality of anthropogenic global warming, so we are all deeply interested in how our particular systems are likely to respond. Unfortunately this is not an easy question to answer, for three reasons:

- Landslides respond to changes in pore pressure (i.e. groundwater level). Groundwater level is controlled by precipitation inputs and by evapotranspiration outputs, so knowing how groundwater will respond requires quantification of both. It might be expected that in a warmer world precipitation will on average increase (see below), but evapotranspiration will also increase. Understanding the balance between these parameters is at best a challenge.

- Landslides are localised phenomena, usually sited in upland areas in which rainfall patterns are complex and variable. Unfortunately, global climate models work at much larger spatial scales (typically 1 or 1.5 degrees of latitude and longitude). This makes it difficult to scale the outputs to an individual landslide.

- In many parts of the world, precipitation is controlled by large-scale weather systems, such as ENSO and the Asian SW monsoon. Climate models still struggle to simulate these systems adequately.
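To give a sense of the scale mismatch behind the second point, a minimal sketch, assuming a spherical Earth with a mean radius of 6371 km, computes the approximate size of 1 and 1.5 degree grid cells at an arbitrary example latitude of 30 degrees.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; treats the Earth as a sphere


def grid_cell_km(resolution_deg: float, latitude_deg: float) -> tuple[float, float]:
    """Approximate north-south and east-west dimensions (km) of a square lat/lon grid cell."""
    km_per_degree = 2.0 * math.pi * EARTH_RADIUS_KM / 360.0
    north_south = resolution_deg * km_per_degree
    east_west = north_south * math.cos(math.radians(latitude_deg))  # meridians converge poleward
    return north_south, east_west


for res in (1.0, 1.5):
    ns, ew = grid_cell_km(res, 30.0)  # 30 degrees is an arbitrary example latitude
    print(f"{res} degree cell at 30 degrees: {ns:.0f} km x {ew:.0f} km, about {ns * ew:,.0f} km^2")
```

A 1.5 degree cell at that latitude covers roughly 24,000 km^2, whereas even a large landslide is typically measured in hundreds of metres to a few kilometres.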

Allan, R.P. and B.J. Soden, 2008. Atmospheric warming and the amplification of precipitation extremes. Science, 321 (5895), 1481-1483. You can download a copy from Brian Soden's website.

This paper is interesting because it looks at extreme precipitation in the context of climate change. In the last couple of years it has become clear that many of the most damaging landslide events tend to occur as a result of precipitation extremes - i.e. comparatively short duration, high intensity rainfall (the type associated with a particular storm or front) rather than long duration, lower intensity events. Understanding how extreme precipitation will change is thus very helpful.

Allan and Soden started from the observation that the GCMs all forecast that extreme precipitation events will become more common as the climate warms. They used a combination of measurements of daily precipitation over the tropical ocean, from a satellite instrument called SSM/I, and the outputs from global climate models to look at the response of tropical precipitation events to natural changes in surface temperature and atmospheric moisture content. Of relevance to landslides, a very clear link was observed: in periods when temperatures were high the number of observed extreme rainfall events increased, and vice versa. What is surprising, though, is that the response of the natural system seems to be more extreme than that of the climate models - i.e. the climate models are too conservative in their forecasts of these extreme events.

The relevance of these findings for landslides should be quite clear. Increases in the occurrence of extreme precipitation intensities might well be expected to increase the occurrence of landslides. It should be noted though that there is some way to go to really establish this link. For example, this paper is essentially based on a dataset collected over the ocean. There is a need to see whether the same applies on land. However, it is good to see papers being produced that start to answer the questions that we are asking.

Wednesday, June 11, 2008

Deaths and research in landslides

Now that the Tangjiashan crisis is over, it is clearly time to move on to other things. I will post a retrospective on that event when the dust has settled. For now I think we should allow the Chinese to bask in the success of their achievement.

To change the topic, I thought it would be interesting to post an analysis that I did a couple of years ago. The analysis was simple but quite fun: I used the landslide fatality database for 2003 and 2004 to calculate the proportion of fatalities by large geographical area. I then looked at the field areas of the research presented in the Springer journal Landslides and in papers presented at a selection of international landslide conferences, and calculated the same regional proportions for the research.

Ideally, one would hope, there should be some relationship between the locations of landslide deaths and the locations of landslide research. Care is needed, however, as:

1. Two years of landslide data might not be representative; and

2. The sample of the areas of research might not be representative.

However, the plot does show how far we are from an equitable situation:

In my simplistic world I would hope that most areas plot close to the central line on the graph. In most cases this is clearly not so. The regions with really serious landslide problems in this period - Central America, SE Asia and S Asia - have a tiny proportion of the research. The regions that dominate landslide research - North America and Europe - had very few landslide fatalities. Only East Asia looks to be in the right place amongst those regions with large numbers of both research projects and fatalities.
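One way to quantify distance from the central line, assuming that line marks equal shares of research and fatalities, is the log ratio of the two shares for each region. A minimal sketch follows; the regional shares are invented purely to illustrate the calculation and are not the values in the plot.

```python
import math

# Invented shares of the global total for a few regions; NOT the values in the plot.
fatality_share = {"Central America": 0.40, "South Asia": 0.20, "East Asia": 0.15, "Europe": 0.02}
research_share = {"Central America": 0.02, "South Asia": 0.05, "East Asia": 0.18, "Europe": 0.35}


def imbalance(region: str) -> float:
    """log10(research share / fatality share): 0 on the central line, negative = under-researched."""
    return math.log10(research_share[region] / fatality_share[region])


# List regions from most under-researched to most over-researched relative to deaths.
for region in sorted(fatality_share, key=imbalance):
    print(f"{region:<16} {imbalance(region):+.2f}")
```

The log scale treats a region with ten times "too much" research and one with ten times "too little" as equally far from the line, which suits a comparison of proportions.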

Two things emerge:

1. Care is needed because in 2004 landslides in Haiti caused huge problems, which means that for this period Central America dominates the statistics. If we were to take the period 2002-2006, East and South Asia would dominate the fatality count.

2. Only E. Asia appears to have the balance right between research and fatalities. This reflects the large landslide programmes in China, Japan, Hong Kong and Taiwan.

Of course the picture might look different if this were to be examined through the lens of economic losses rather than fatalities, but not I suspect if we were to normalise by GDP or per capita income. All of which serves to highlight the fact that the landslide research community should shift its focus if it really wants to make a difference by saving lives.

I will try to do a better analysis of the full dataset over the summer, but thought it would be interesting to share this initial analysis.
